| |

|

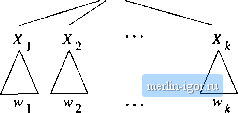

Строительный блокнот Automata methods and madness Leftmost derivation  Derivation -derivation Recursive inference Figure 5.7: Proving the equivalence of certain statements about grannnars Note that two of the arcs are very simple and will not be proved formally. If w has a leftmost derivation from A, then it surely has a derivation from A, since a leftmost derivation is a derivation. Likewise, if w has a rightmost derivation, then it surely has a derivation. We now proceed to prove the harder steps of this equivalence. 5.2.4 From Inferences to Trees Theorem 5.12: Let G {V.T,P,S) be a CFG. If the recursive uiference procedure tells us that terminal string w is in the language of variable A, then there is a parse tree with root A and yield ? . PROOF: The proof is an induction on the number of steps used to infer that w is in the language of A. BASIS: One step. Then only the basis of the inference procedure must have been used. Thus, there must be a production A w. The tree of Fig. 5.8, where there is one leaf for each position of w, meets the conditions to be a parse tree for grammar G, and it evidently has yield w and root A. In the special case that w = the tree has a single leaf labeled € and is a legal parse tree with root A imd yield w. INDUCTION: Suppose that the fact vj is in the language of A is inferred after n--1 inference steps, and that the statement of the theorem holds for all strings X and variables В such that the membership of x m the language of В was inferred using n or fewer inference steps. Consider the last step of the inference that ги is in the language of .4. This inference uses some production for Л, say A Xk, where each Xi is either a variable or a terminal. We need to prove these equivalences, and we do so using the plan of Fig. 5.7. That is, each arc in that diagram indicates that we prove a theorem that says if w meets the condition at the tail, then it meets the condition at the head of the arc. For instance, we shall show in Theorem 5.12 that if w is inferred to be in the language of A by recursive inference, then there is a parse tree with root A and yield w. - w - Figure 5.8: Tree constructed in the basis case of Theorem 5.12 We can break w up as wiw-i ivk, where: 1. If Xi is a terminal, then iw; - Xi; i.e., Wj consists of only this one terminal from the production. 2. If Xi is a variable, then Wi is a string that was previously inferred to be in the language of X;. That is, this inference about Wi took at most n of the n-\-l steps of the inference that w is in the language of A. It cannot take all n-\-l steps, because the final step, using production A X\X2 Xk, is surely not part of the inference about wi. Consequently, we may apply the inductive hypothesis to Wi and Xj, and conclude that there is a parse tree with yield v!i and root Xj.  Figure 5.9: Tree used in the inductive part of the proof of Theorem 5.12 We then construct a tree with root A and yield w, as suggested in Fig. 5.9. There is a root labeled A, whose children are Xi,X2,-.. ,Xk. This choice is valid, since A X1X2 X is a production of G. The node for each Xi is made the root of a subtree with yield wi. In case (1), where Xi is a terminal, this subtree is a trivial tree with a single node labeled Xi. That is, the subtree consists of only this chUd of the root. Since Wi - Xi in case (1), we meet the condition that the yield of the subtree is ш;. In case (2), X, is a variable. Then, we invoke the inductive hypothesis to claim that there is some tree with root Xi and yield Wj. Tins tree is attached to the node for Xi in Fig. 5.9. The tree so constructed has root A. Its yield is the yields of the subtrees, concatenated from left to right. That string is w1w2 Wkt which is w. □ We are now able to prove a theorem that lets us convert a parse tree to a leftmost derivation. The proof is an induction on the height of the tree, which is the maximum length of a path that starts at the root, and proceeds downward through descendants, to a leaf. For instance, the height of the tree in Fig. 5.6 is 7. The longest root-to-lcaf path in tiiis tree goes to the leaf labeled b. .Note that path lengths conventionally count the edges, not the nodes, so a path consisting of a single node is of length 0. Theorem 5.14: Let G = (V, T, P, S) be a CFG, and suppose there is a parse tree with root labeled by variable A and with jield w, where w is in Г*. Then there is a leftmost derivation A w in grammar G. PROOF: We perform an induction on the height of the tree. ifi fac-t, it is this property of being able to make a, string-for-variable substitution regardless of context that gave rise originally to the tprm context-free. Ihere is a more powerful classes of grammars, called context-sensitive, where replacements are permitted only if certain strings appear to the left and/or right. Context-sensitive grammarfi do not play a major role in practitf today. 5,2.5 From Trees to Derivations We shall now show how to construct a leftmost derivation from a parse tree. The method for constructbig a rightmost derivation uses the same ideas, and we shall not explore the rightmost-derivation case. In order to understand how derivations may be constructed, we need first to see how one derivation of a string from a variable can be embedded within another derivation. An example should illustrate the point. Example 5.13 ; Let us again consider the expression grammar of Fig. 5.2. It is easy to check that there is a derivation E Ibab As a result, for any strings a and .3, it is also true that qE/? = al(3 albp => aabp The justification is that we can make the same replacements of production bodies for heads in the context of a and as we can in isolation. For instance, if we have a derivation that begins E E + E E + (E), we could apply the derivation of ab from the second E by treating + ( as a and ) as 9. This derivation would then continue E+{E) E + iI)=>E + {lb) E+ {ab)

|